The Problem with Classical RAG

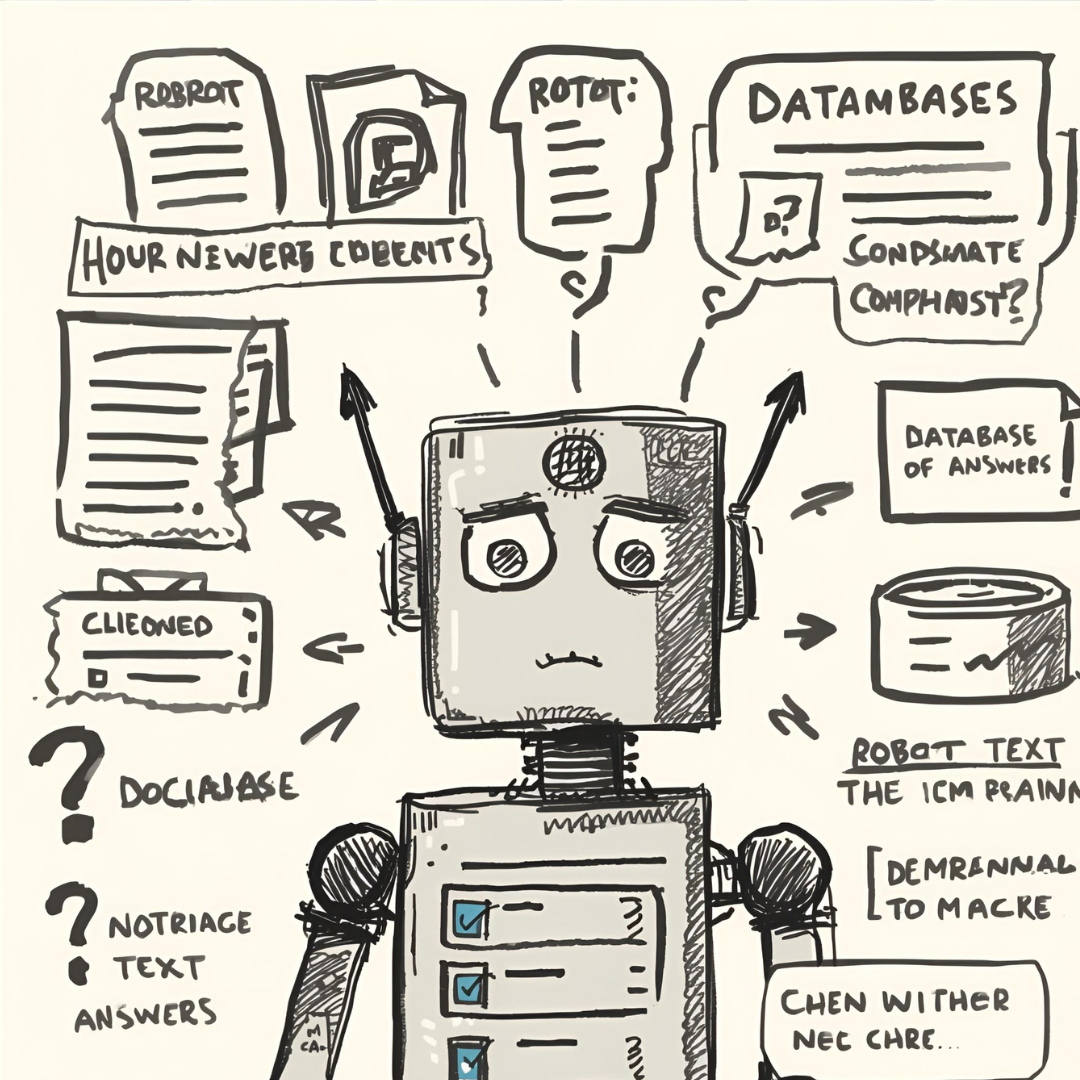

In many user-facing applications, such as documentation search or user manuals, the goal is to retrieve relevant text that matches user intent. Traditional RAG (Retrieval-Augmented Generation) pipelines embed text passages and retrieve them through semantic search, but this creates a fundamental problem: we are indexing the answers, not the questions.

Because the index holds answer passages while users submit questions, the two sides of the similarity search are written in different forms. When a user phrases a question differently from how the answer is worded in the indexed passage, the query and passage embeddings may not land close together, and relevance suffers.

The Solution: ICT-QEI

ICT-QEI (Inverse Cloze Task for Question-Embedding Indexing) changes what gets indexed: instead of embedding answer passages directly, it embeds questions generated from them.

Inverse Cloze Task (ICT)

Each passage becomes a source for generating questions rather than something stored only to be matched directly. Models learn to associate the generated questions with the answer text they came from. This inverts the traditional approach, in which we store answers and hope that queries match them.
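The indexing side of this step can be sketched as follows. This is a minimal illustration, not an ICT-QEI reference implementation. The question generator is passed in as a plain function, which in practice would wrap an LLM call. The names `IndexedQuestion` and `build_question_entries`, and the canned questions in the usage example, are hypothetical. Each generated question keeps the id of the passage it came from, so a match on the question can be resolved back to the answer text.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class IndexedQuestion:
    question: str
    passage_id: int  # index of the answer passage this question was generated from


def build_question_entries(
    passages: list[str],
    generate_questions: Callable[[str], Iterable[str]],
) -> list[IndexedQuestion]:
    """Generate questions for every passage and link each one back to its source."""
    entries: list[IndexedQuestion] = []
    for passage_id, passage in enumerate(passages):
        seen: set[str] = set()
        for raw in generate_questions(passage):
            question = raw.strip()
            key = question.lower()
            # Skip empty output and duplicates that differ only in case or whitespace.
            if question and key not in seen:
                seen.add(key)
                entries.append(IndexedQuestion(question, passage_id))
    return entries


if __name__ == "__main__":
    passages = [
        "To reset your password, open Settings > Account and choose Reset password.",
        "Invoices can be downloaded as PDF files from the Billing page.",
    ]
    # Hypothetical stand-in for LLM output; a real system would prompt a model here.
    canned = {
        passages[0]: [
            "How do I reset my password?",
            "I forgot my password, what should I do?",
            " how do I reset my password? ",  # duplicate, will be dropped
        ],
        passages[1]: ["Where can I download my invoice?", "Can I get a PDF of my bill?"],
    }
    for entry in build_question_entries(passages, lambda p: canned.get(p, [])):
        print(entry.passage_id, entry.question)
```

Keeping the generator as an injected function means the same indexing code works whether questions come from a hosted model, a local model, or hand-written fixtures in tests.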

Question-based Retrieval

The index is built from the generated questions. A user query is compared against these questions by semantic similarity, and each matching question leads back to the answer passage it was generated from. The key advantage is that both the query and the indexed content are expressed as questions, which improves alignment with user intent.
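The retrieval step can be illustrated with a small in-memory index. This sketch rests on a few assumptions. `embed` is a toy bag-of-words vector standing in for a real sentence-embedding model. `QuestionIndex` is a hypothetical class, not an existing library API. Because several questions can point to the same passage, the search keeps each passage's best-scoring question and returns passages rather than questions.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use a sentence-embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class QuestionIndex:
    """Indexes generated questions; search returns the answer passages they point to."""

    def __init__(self) -> None:
        self.entries: list[tuple[Counter, int]] = []  # (question vector, passage id)
        self.passages: list[str] = []

    def add_passage(self, passage: str, questions: list[str]) -> int:
        passage_id = len(self.passages)
        self.passages.append(passage)
        for q in questions:
            self.entries.append((embed(q), passage_id))
        return passage_id

    def search(self, query: str, k: int = 1) -> list[tuple[str, float]]:
        query_vec = embed(query)
        best: dict[int, float] = {}
        for vec, passage_id in self.entries:
            score = cosine(query_vec, vec)
            # Several questions may map to one passage: keep its best-matching question.
            if score > best.get(passage_id, -1.0):
                best[passage_id] = score
        ranked = sorted(best.items(), key=lambda item: item[1], reverse=True)[:k]
        return [(self.passages[pid], score) for pid, score in ranked]


if __name__ == "__main__":
    index = QuestionIndex()
    index.add_passage(
        "To reset your password, open Settings > Account and choose Reset password.",
        ["How do I reset my password?", "I forgot my password, what should I do?"],
    )
    index.add_passage(
        "Invoices can be downloaded as PDF files from the Billing page.",
        ["Where can I download my invoice?", "Can I get a PDF of my bill?"],
    )
    print(index.search("forgot my password")[0][0])
```

Replacing the toy `embed` with a dense embedding model, and the linear scan with an approximate nearest-neighbor index, changes the scale of this lookup but not its shape: match on questions, return passages.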

Key Challenges

Two primary difficulties emerge when implementing this approach:

1. Chunking Strategy

Text must be split along logical and contextual boundaries, such as sections and paragraphs, not merely by size. Poor chunking produces questions that miss part of the context the answer depends on.
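One way to split by structure rather than size is sketched below. It assumes Markdown-style source documents in which headings start with `#` and paragraphs are separated by blank lines. The `chunk_by_structure` function and its `max_chars` limit are illustrative and are not part of ICT-QEI as described here. Paragraphs are merged only within a section, so a chunk never crosses a heading. Every chunk also carries its section heading, which lets the question generator see that context.

```python
import re


def chunk_by_structure(text: str, max_chars: int = 800) -> list[str]:
    """Split a Markdown-style document on headings and paragraphs, not fixed windows.

    Paragraphs within one section are merged until adding the next one would
    exceed max_chars; each chunk is prefixed with its section heading.
    """
    chunks: list[str] = []
    heading = ""
    buffer: list[str] = []

    def flush() -> None:
        # Emit the buffered paragraphs as one chunk under the current heading.
        if buffer:
            body = "\n\n".join(buffer)
            chunks.append(f"{heading}\n{body}" if heading else body)
            buffer.clear()

    for block in re.split(r"\n\s*\n", text.strip()):
        block = block.strip()
        if not block:
            continue
        lines = block.splitlines()
        if lines[0].startswith("#"):
            flush()  # a chunk never straddles two sections
            heading = lines[0]
            rest = "\n".join(lines[1:]).strip()
            if rest:
                buffer.append(rest)
            continue
        if buffer and len("\n\n".join(buffer + [block])) > max_chars:
            flush()
        buffer.append(block)
    flush()
    return chunks
```

A single paragraph longer than `max_chars` is kept whole rather than cut mid-sentence. Whether to split such paragraphs further depends on the corpus.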

2. LLM Generation Cost

Producing diverse, high-quality questions requires substantial LLM inference. Each chunk needs several questions to cover the different ways users might ask about its content.
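Because every chunk is sent to the model, cost grows with both corpus size and questions per chunk. The rough estimate below makes three assumptions: one LLM call per chunk that returns all of that chunk's questions, a fixed prompt overhead, and per-token prices that you supply. Every number in the example is a hypothetical placeholder, not a measured figure or a real provider's price.

```python
from dataclasses import dataclass


@dataclass
class GenerationEstimate:
    input_tokens: int
    output_tokens: int

    def cost(self, input_price_per_m: float, output_price_per_m: float) -> float:
        """Cost given prices per million tokens (supply your provider's rates)."""
        return (self.input_tokens * input_price_per_m
                + self.output_tokens * output_price_per_m) / 1_000_000


def estimate_generation(
    num_chunks: int,
    avg_chunk_tokens: int,
    questions_per_chunk: int,
    avg_question_tokens: int,
    prompt_overhead_tokens: int = 200,
) -> GenerationEstimate:
    """Assumes one call per chunk: the prompt holds the chunk plus fixed instructions,
    and the completion holds all questions generated for that chunk."""
    input_tokens = num_chunks * (avg_chunk_tokens + prompt_overhead_tokens)
    output_tokens = num_chunks * questions_per_chunk * avg_question_tokens
    return GenerationEstimate(input_tokens, output_tokens)


if __name__ == "__main__":
    # Hypothetical corpus: 2,000 chunks of ~400 tokens, 8 questions of ~20 tokens each.
    est = estimate_generation(2_000, 400, 8, 20)
    print(est.input_tokens, est.output_tokens)  # 1200000 320000
```

Under this model, doubling `questions_per_chunk` doubles only the output side. Generating each question with a separate call would multiply the input side as well. Changed chunks must also be regenerated, which is one reason a stable corpus favors this approach.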

When to Use This Approach

Question-based indexing is recommended for high-value structured documents such as contracts or compliance manuals, where improved retrieval precision justifies the preprocessing cost. For casual documentation or frequently changing content, classical RAG may still be more practical, since every content change means regenerating questions for the affected chunks.

The investment in preprocessing pays off when:

- Document accuracy is critical (legal, compliance, medical)

- Users ask questions in varied and unpredictable ways

- The corpus is relatively stable over time

- Failed retrievals have significant business impact

Conclusion

The shift from answer-based to question-based indexing is an important evolution in RAG systems. By aligning the index structure with how users actually phrase their queries, it can improve retrieval accuracy in critical applications.